Parallel Agents + Smart Model Selection in Cursor

A battle-tested system for shipping faster and spending less

One of the biggest surprises in building MVPs with AI copilots is how easy it is to burn money without realizing it. The choice of tool matters far more than people assume, and optimizing your setup matters just as much as picking the right model. This post walks through the configuration that has worked for me, the rules I use to control drift, and the workflow that lets me ship quickly without runaway costs.

Why I Recommend Cursor

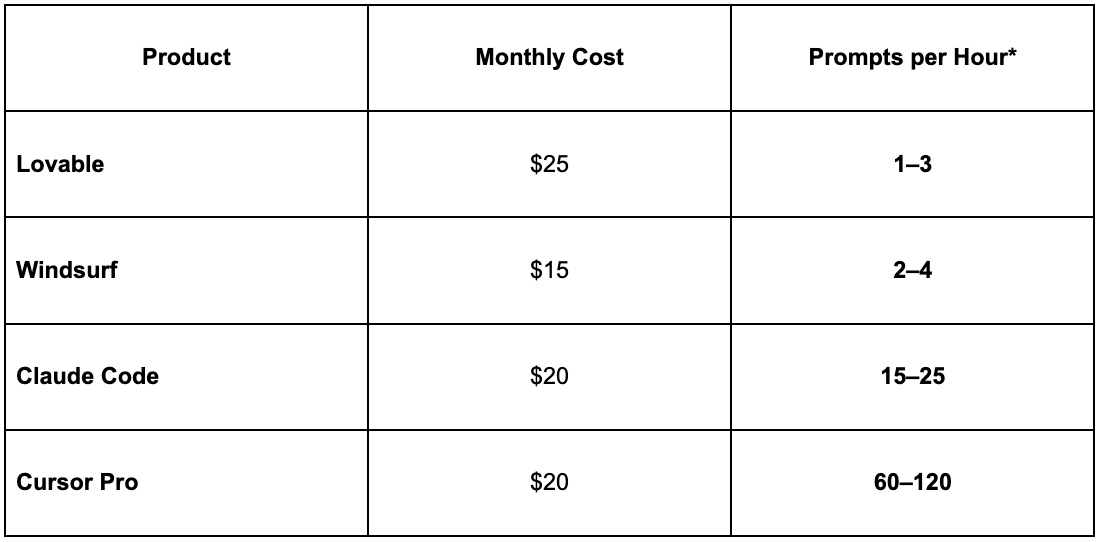

Most AI coding tools look similar on paper, with monthly plans ranging from fifteen to twenty-five dollars. In practice, what you get for that price varies dramatically. Cursor has consistently delivered the strongest value for me in terms of usable throughput and cost control.

*Prompt counts are based on my real usage and observed tool limits.

Why Model Selection Matters

My Cursor usage report showed more than $420 of model value consumed in a single month. Thanks to bundled credits, I paid far less, but the breakdown was revealing:

Auto mode escalated to more expensive models far more often than expected

Codex handled heavy tasks even when the work did not require it

Thinking modes multiplied cost by a factor of five to fifteen

This pushed me to rebuild my workflow around a simple idea: match the model to the task. Once this principle was applied intentionally, my costs dropped, my builds stabilized, and throughput increased.
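The thinking-mode multiplier compounds faster than it feels. As a back-of-the-envelope sketch, assume a regular request costs one unit and some fraction of requests run in a thinking mode at five to fifteen times that. The function name and parameters below are illustrative, not fields from Cursor's usage report.

```python
def effective_cost_multiplier(thinking_share: float, thinking_multiplier: float) -> float:
    """Average cost per request relative to running everything in regular mode.

    thinking_share: fraction of requests sent to a thinking mode (0.0 to 1.0).
    thinking_multiplier: cost of one thinking request relative to a regular one.
    """
    if not 0.0 <= thinking_share <= 1.0:
        raise ValueError("thinking_share must be between 0 and 1")
    if thinking_multiplier < 1.0:
        raise ValueError("thinking_multiplier should be at least 1")
    # Regular requests cost 1 unit each; thinking requests cost `thinking_multiplier` units.
    return (1.0 - thinking_share) * 1.0 + thinking_share * thinking_multiplier


if __name__ == "__main__":
    # Sweep a few shares across the low and high ends of the 5x to 15x range.
    for share in (0.1, 0.25, 0.5):
        for mult in (5, 15):
            overall = effective_cost_multiplier(share, mult)
            print(f"{share:.0%} thinking at {mult}x -> {overall:.2f}x overall")
```

For example, sending a quarter of requests to a thinking mode at the 15x end of the range works out to 4.5 times the spend of running everything in regular mode.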

The Three-Model System

These are the only models I keep enabled in Cursor. Everything else is turned off.

1. Claude Sonnet 4.5 (regular)

My primary model, used for about eighty percent of all work. Ideal for:

feature development

debugging

planning

architecture and system discussion

single-file refactors

documentation

frontend and backend glue logic

Sonnet 4.5 provides the best balance of intelligence, cost, and stability. It handles most work predictably and with minimal drift.

2. GPT 5 (standard)

I use GPT 5 when I need more structure or clarity, especially when:

Sonnet gives an ambiguous response

type systems get complex

backend logic needs precision

algorithms require clean reasoning

I want explicit chains of thought without triggering costly thinking modes

GPT 5 is a reliable complement to Sonnet.

3. GPT 5.1 Codex

Reserved for premium, multi-file, or coherence-heavy tasks:

complex refactors

React tree rewrites

schema and service migrations

cross module changes

deep TypeScript coordination

Codex is powerful but easy to overspend with. I use it intentionally and only for work where it clearly outperforms the lighter models.
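The three tiers can be written down as a plain lookup table, a rough stand-in for the routing decision I make before each task. This is a minimal sketch: the task labels and the `pick_model` helper are hypothetical names for the categories listed above, and anything unrecognized falls back to Sonnet as the default.

```python
from enum import Enum
from typing import Dict


class Tier(Enum):
    STANDARD = "Claude Sonnet 4.5"  # default for roughly 80% of work
    STRUCTURED = "GPT 5"            # precision: complex types, backend logic, algorithms
    PREMIUM = "GPT 5.1 Codex"       # multi-file, coherence-heavy work


# Hypothetical task labels mirroring the three lists above.
ROUTES: Dict[str, Tier] = {
    "feature": Tier.STANDARD,
    "debugging": Tier.STANDARD,
    "planning": Tier.STANDARD,
    "architecture": Tier.STANDARD,
    "single_file_refactor": Tier.STANDARD,
    "documentation": Tier.STANDARD,
    "glue_logic": Tier.STANDARD,
    "complex_types": Tier.STRUCTURED,
    "backend_precision": Tier.STRUCTURED,
    "algorithm": Tier.STRUCTURED,
    "multi_file_refactor": Tier.PREMIUM,
    "react_tree_rewrite": Tier.PREMIUM,
    "schema_migration": Tier.PREMIUM,
    "cross_module_change": Tier.PREMIUM,
    "deep_typescript_coordination": Tier.PREMIUM,
}


def pick_model(task: str) -> str:
    """Return the model name for a task label, defaulting to the standard tier."""
    return ROUTES.get(task, Tier.STANDARD).value
```

Defaulting unknown work to the cheapest tier, rather than the most capable one, is the point: escalation has to be a deliberate entry in the table.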

How I Enforce Good Model Selection

I use a lightweight Cursor rule that adds a small header before each response. For example:

[model-check] task=SINGLE_FILE_FEATURE tier=STANDARD note=Codex not required

This keeps model choice visible and encourages intentional routing. It is not restrictive. It simply reduces unconscious escalation and helps maintain consistency across sessions and agents.
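Because the header has a fixed shape, it is also easy to audit after the fact. The sketch below assumes headers follow exactly the `task=`, `tier=`, `note=` layout in the example above and that agent transcripts are saved as plain text; `parse_header` and `tier_counts` are hypothetical helpers, not part of Cursor.

```python
import re
from collections import Counter
from typing import Dict, Optional

# Matches lines shaped like:
# [model-check] task=SINGLE_FILE_FEATURE tier=STANDARD note=Codex not required
HEADER = re.compile(
    r"^\[model-check\]\s+task=(?P<task>\S+)\s+tier=(?P<tier>\S+)(?:\s+note=(?P<note>.*))?$"
)


def parse_header(line: str) -> Optional[Dict[str, str]]:
    """Parse one model-check header; return None if the line is not a header."""
    match = HEADER.match(line.strip())
    if match is None:
        return None
    # The note is optional, so normalize a missing note to an empty string.
    return {"task": match["task"], "tier": match["tier"], "note": match["note"] or ""}


def tier_counts(transcript: str) -> Counter:
    """Count how often each tier was recommended across a saved transcript."""
    counts: Counter = Counter()
    for line in transcript.splitlines():
        parsed = parse_header(line)
        if parsed is not None:
            counts[parsed["tier"]] += 1
    return counts
```

A weekly tally like this makes drift toward the premium tier visible before it shows up on the usage report.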

The Five Rules Behind My Workflow

The model system above is only one part of the framework. The entire playbook follows five simple principles that guide how I work with LLMs.

1. Architecture first

Start with clear data flow, boundaries, and ownership. Strong architecture helps both humans and LLMs understand the system.

2. Keep it clean, simple, and DRY

LLMs amplify whatever structure they are given. Clean patterns, consistent file shapes, and minimal duplication produce better generations.

3. Think full stack

AI copilots perform best when they can reason across frontend, backend, and data layers at once. Treat the system holistically.

4. Plan intentionally

Small, well-scoped tasks produce higher quality output. I rely on deliberate planning, tight acceptance criteria, and parallelization to move quickly without creating thrash.

5. Match the model to the task

Use the lowest cost model that can produce the required result, and escalate only when the problem demands more power. This is the foundation of model economy and cost control.
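The escalation principle amounts to a loop over models ordered from cheapest to most expensive. A minimal sketch, assuming you can invoke a model programmatically and check its output against acceptance criteria: `run` and `accept` are hypothetical callables you would supply, and the ladder reuses the three models from this post.

```python
from typing import Callable, Optional, Sequence, Tuple

# Cheapest first; mirrors the three-model system described earlier.
LADDER: Sequence[str] = ("Claude Sonnet 4.5", "GPT 5", "GPT 5.1 Codex")


def solve_with_escalation(
    task: str,
    run: Callable[[str, str], str],  # hypothetical: (model, task) -> result
    accept: Callable[[str], bool],   # hypothetical acceptance check on a result
    ladder: Sequence[str] = LADDER,
) -> Tuple[Optional[str], Optional[str]]:
    """Try each model from cheapest to most expensive; stop at the first accepted result."""
    for model in ladder:
        result = run(model, task)
        if accept(result):
            return model, result
    # Nothing passed even at the top tier: rescope the task rather than retrying.
    return None, None
```

Stopping at the top of the ladder instead of retrying Codex is a deliberate choice here: if the most capable model fails the check, the task is probably scoped too broadly.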

These principles support the rules in my Cursor configuration and influence every part of the workflow below.

Download the Rules

Add the .mdc files directly to your project's .cursor/rules folder:

https://github.com/ycb/cursor-ai-coding-playbook

Or copy the rules from Google Sheets:

https://docs.google.com/spreadsheets/d/1E2VTe15m16ahJkYaUkUvCBzdy--jRQcvehYdw6Sm92E/edit?usp=sharing

Parallel Agents: How I Build Four to Five Times Faster

The next breakthrough came from treating Cursor’s parallel agents as first-class workers rather than a single assistant. I break each sprint into independent tasks and run agents simultaneously, each with a narrow mission and clear acceptance criteria. This dramatically increases throughput without creating chaos.

1. Use GPT 5.1 Codex once for sprint planning

Codex is excellent at decomposing problems into:

isolated subsystems

independent tasks

realistic sequencing

clear acceptance criteria

I provide scope and constraints. It produces a coherent plan for the entire sprint.
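Once the plan records which tasks depend on which, grouping them into waves that can run in parallel is a topological sort. This sketch uses Python's standard `graphlib` module (3.9+); the task names and the dependency of streaming on the draft pipeline are made up for illustration.

```python
from graphlib import TopologicalSorter
from typing import Dict, List, Set


def plan_waves(depends_on: Dict[str, Set[str]]) -> List[List[str]]:
    """Group tasks into waves; every task in a wave can run in parallel."""
    sorter = TopologicalSorter(depends_on)
    sorter.prepare()  # raises graphlib.CycleError if the plan has circular dependencies
    waves: List[List[str]] = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # sorted only for stable, readable output
        waves.append(ready)
        sorter.done(*ready)  # mark the wave finished so dependents become ready
    return waves


if __name__ == "__main__":
    # Hypothetical plan: streaming work waits on the draft pipeline.
    plan = {
        "branch_sync": set(),
        "draft_pipeline": set(),
        "metrics": set(),
        "streaming": {"draft_pipeline"},
    }
    print(plan_waves(plan))
    # prints [['branch_sync', 'draft_pipeline', 'metrics'], ['streaming']]
```

Each inner list is a candidate set of simultaneous agents; a plan that collapses into one task per wave is a sign the decomposition is not actually parallel.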

2. Assign each agent a focused mission

Each agent owns a subsystem. For example:

branch synchronization and test restoration

draft creation pipeline hardening

match intelligence and top level metrics

streaming response integration

Narrow scopes lead to predictable output.
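Narrow missions also allow a cheap safety check before agents launch in parallel: no two missions should own the same files. This is a sketch under stated assumptions. `Mission`, `owned_paths`, and `run_agent` are hypothetical, and `run_agent` is a stub standing in for however you actually hand work to a Cursor agent.

```python
import asyncio
from dataclasses import dataclass
from itertools import combinations
from typing import List


@dataclass(frozen=True)
class Mission:
    name: str
    owned_paths: frozenset  # paths this agent may edit (hypothetical layout)
    acceptance: str         # one-line acceptance criterion


def check_disjoint(missions: List[Mission]) -> None:
    """Refuse to launch if two missions list the same path (exact string match)."""
    for a, b in combinations(missions, 2):
        shared = a.owned_paths & b.owned_paths
        if shared:
            raise ValueError(f"{a.name} and {b.name} both own {sorted(shared)}")


async def run_agent(mission: Mission) -> str:
    # Stub: a real version would dispatch the mission to an agent and await its report.
    await asyncio.sleep(0)
    return f"{mission.name}: done ({mission.acceptance})"


async def run_sprint(missions: List[Mission]) -> List[str]:
    check_disjoint(missions)
    # gather() runs the missions concurrently and returns results in input order.
    return await asyncio.gather(*(run_agent(m) for m in missions))
```

The overlap check compares paths exactly, so `src/` and `src/api/` would not be flagged; a stricter version would compare path prefixes.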

3. Run agents with Sonnet by default

All agents run on Claude Sonnet 4.5 unless the model-check rule recommends a higher tier. Only complex refactors trigger Codex.

4. Use browser automation for QA

When an agent claims its work is ready, I send it this prompt:

You have access to browser automation. Credentials are in .env. Validate E2E.

The agent executes a full walkthrough, checks state transitions, and confirms no regressions. This replaces most of my manual QA.

5. Use a cheap model for small commits

Renames, comment updates, and small refactors go to Claude Haiku or GPT 4o mini. These tasks require speed, not intelligence.

Closing Thoughts

Combining deliberate model selection, a small set of rules, parallel agents, and automated QA has reshaped how I build software. Throughput increased significantly, costs became predictable, and my codebase stayed consistent even with multiple agents contributing in parallel.

If you are building with Cursor, Claude, or GPT 5 this year, these patterns will help you move faster, spend less, and reduce drift.